Example Squid Deployment: Wikimedia

(Last Updated: January 2007)

Wikimedia began using Squid in 2004 to reduce the load on their web servers, with almost immediate positive results. Since then, Wikimedia have been instrumental in helping the Squid developers track down and fix important performance and HTTP compliance bugs. Wikimedia now test the latest release and pre-release code under real-world conditions, helping the Squid developers produce high-quality open-source software from which everyone can benefit.

Wikimedia operate more than 50 Squid servers in three locations around the world (Florida, Amsterdam and Korea), serving almost three gigabits per second of wiki traffic at peak times. (See this link for traffic and request statistics.)

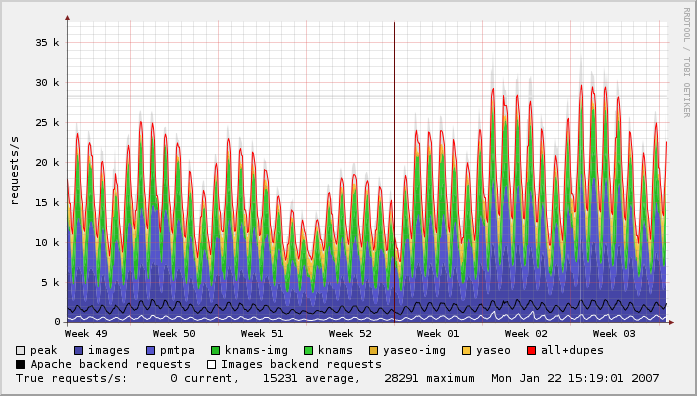

The following graph, taken from the statistics site mentioned above, shows the request load over a seven-week period ending in week 3 of 2007. Compare the Apache and image back-end request rates with the overall rate of requests served.

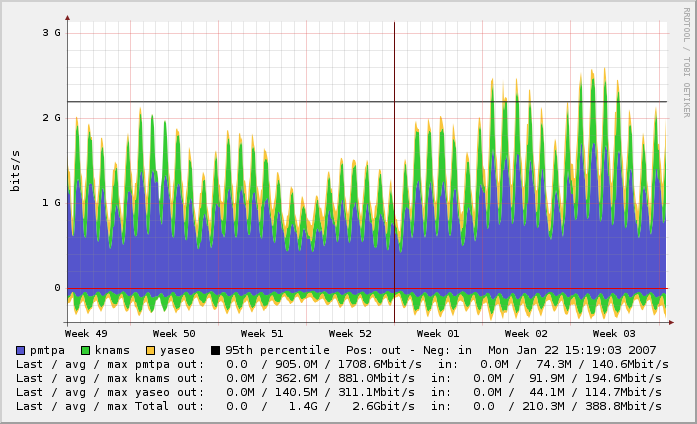

The following graph illustrates the total traffic flow served over the same seven-week period.

Further information can be found at http://meta.wikimedia.org/wiki/Wikimedia_servers. Note that this page is probably already out of date, as Wikimedia are growing very rapidly; browse the rest of the meta.wikimedia.org site for more information.
